Configuring your IDE Interpreter

Codegen creates a custom Python environment in .codegen/.venv. Configure your IDE to use this environment for the best development experience.
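Both IDE setups below ask for the interpreter inside that environment. Here is a rough sketch that assumes .codegen/.venv follows the standard venv layout (bin/python on macOS and Linux, Scripts\python.exe on Windows). It prints the path to paste into your IDE. The helper name venv_python is hypothetical and not part of Codegen.

```python
from __future__ import annotations

import os
import sys
from pathlib import Path


def venv_python(project_root: str | Path, venv_dir: str = ".codegen/.venv") -> Path:
    """Return the expected interpreter path inside the Codegen environment.

    Assumes the standard venv layout: <venv>/bin/python on macOS/Linux,
    <venv>/Scripts/python.exe on Windows.
    """
    venv = Path(project_root) / venv_dir
    if os.name == "nt":
        return venv / "Scripts" / "python.exe"
    return venv / "bin" / "python"


if __name__ == "__main__":
    root = Path(sys.argv[1]) if len(sys.argv) > 1 else Path.cwd()
    interpreter = venv_python(root)
    # absolute() rather than resolve(): resolving would follow the venv's
    # symlink back to the base interpreter, which is not what the IDE needs.
    status = "found" if interpreter.exists() else "not found"
    print(f"{interpreter.absolute()}: {status}")
```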

VSCode, Cursor and Windsurf

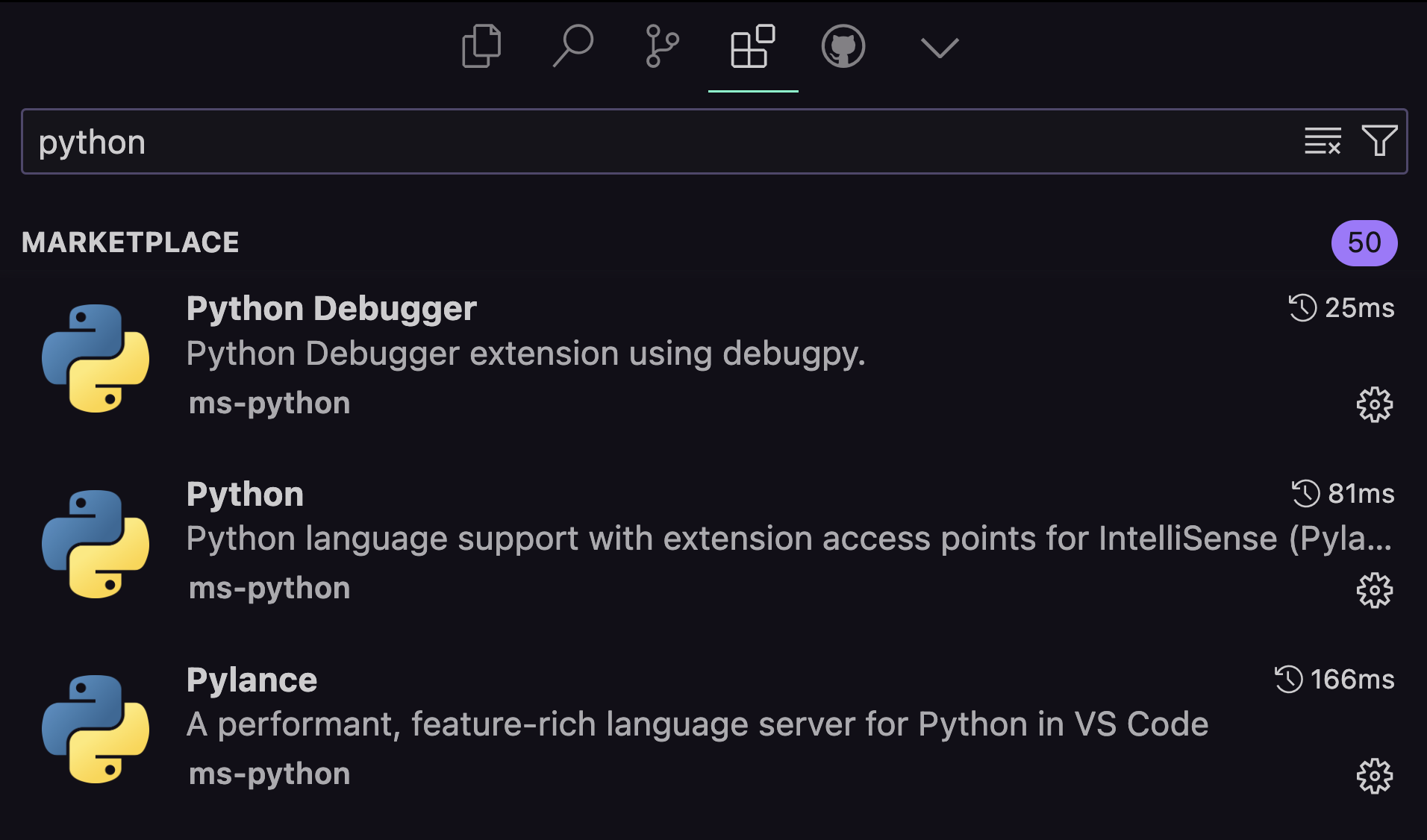

- Install the VSCode Python extensions for language server (LSP) and debugging support. We recommend Python, Pylance, and Python Debugger for the best experience.

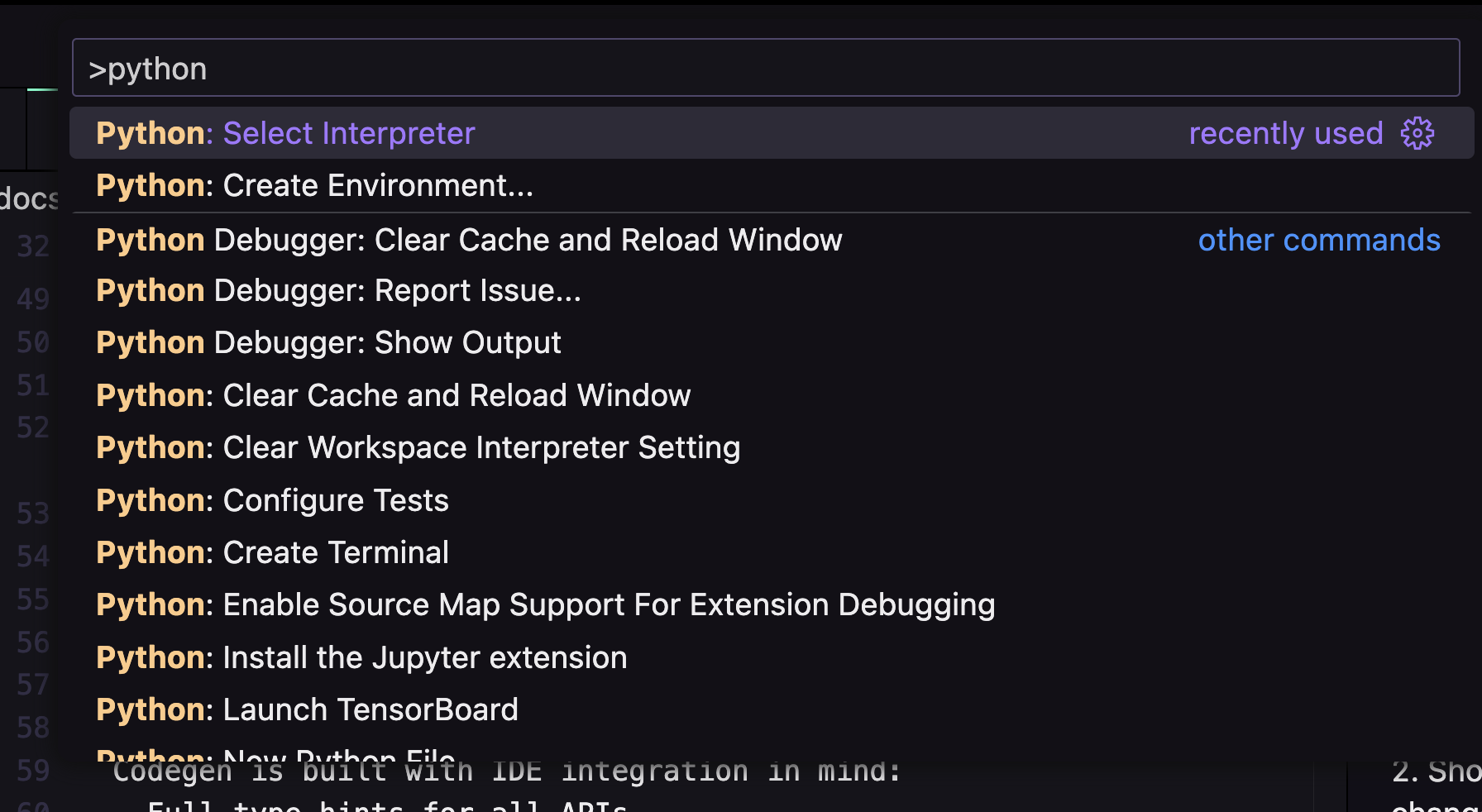

- Open the Command Palette (Cmd/Ctrl + Shift + P)

- Type “Python: Select Interpreter”

- Choose “Enter interpreter path”

- Navigate to and select:

.vscode/settings.json:
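If you would rather script the settings file, the VS Code Python extension reads the python.defaultInterpreterPath setting. The sketch below merges that key into .vscode/settings.json and keeps any existing keys. set_vscode_interpreter is a hypothetical helper, not part of Codegen or VS Code.

```python
from __future__ import annotations

import json
from pathlib import Path


def set_vscode_interpreter(project_root: str | Path, interpreter: str) -> dict:
    """Merge python.defaultInterpreterPath into <project>/.vscode/settings.json.

    Existing keys are preserved. json.loads does not accept comments, which
    VS Code allows in settings.json, so a commented file will fail to parse.
    """
    settings_file = Path(project_root) / ".vscode" / "settings.json"
    settings: dict = {}
    if settings_file.exists():
        text = settings_file.read_text(encoding="utf-8").strip()
        settings = json.loads(text) if text else {}
    settings["python.defaultInterpreterPath"] = interpreter
    settings_file.parent.mkdir(parents=True, exist_ok=True)
    settings_file.write_text(json.dumps(settings, indent=2) + "\n", encoding="utf-8")
    return settings
```

For example, from the project root on macOS or Linux you might call `set_vscode_interpreter(Path.cwd(), str(Path.cwd() / ".codegen" / ".venv" / "bin" / "python"))`. The Python extension may keep using an interpreter you have already selected, so "Python: Select Interpreter" remains the more direct route.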

PyCharm

- Open PyCharm Settings/Preferences

- Navigate to “Project > Python Interpreter”

- Click the gear icon ⚙️ and select “Add”

- Choose “Existing Environment”

- Set interpreter path to:

MCP Server Setup

This step is optional but highly recommended if your IDE supports MCP and you use AI agents. The MCP server is a local server that lets your AI agent interact with Codegen-specific tools. It allows an agent to:

- ask an expert to create a codemod

- improve a codemod

- get setup instructions

IDE Configuration

Cline

Add this to your cline_mcp_settings.json:

Cursor

Under the Settings > Features > MCP Servers section, click “Add New MCP Server” and add the following:
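Cline keeps its servers in cline_mcp_settings.json under a top-level mcpServers object, where each entry has a command and args. If you want to script the Cline change, the sketch below adds one entry without touching the others. add_mcp_server and the server name are hypothetical; copy the actual command and arguments from the Codegen configuration snippet, which is not reproduced here.

```python
from __future__ import annotations

import json
from pathlib import Path


def add_mcp_server(settings_path: str | Path, name: str, command: str, args: list[str]) -> dict:
    """Add or replace one entry under "mcpServers" in an MCP settings file.

    Other servers already listed in the file are left unchanged.
    """
    path = Path(settings_path)
    config: dict = {}
    if path.exists():
        text = path.read_text(encoding="utf-8").strip()
        config = json.loads(text) if text else {}
    servers = config.setdefault("mcpServers", {})
    servers[name] = {"command": command, "args": list(args)}
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

A call would look like `add_mcp_server(path_to_cline_settings, "codegen", "<command>", ["<arg>", ...])`, with the placeholders filled in from the Codegen snippet. Where cline_mcp_settings.json lives depends on your Cline installation.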

Index Codegen Docs

Cursor:

If you use Cursor, you can configure the IDE to index the Codegen docs. To do so, go to Settings > Features > Docs and click Add new docs. We recommend using this URL to index the API reference:

Create a New Codemod

Generate the boilerplate for a new code manipulation program using codegen create. This will:

- Create a new codemod in .codegen/codemods/organize_types/

- Generate a custom system-prompt.txt based on your task

- Set up the basic structure for your program

The generated codemod includes type hints and docstrings, making it easy to get IDE autocompletion and documentation.

Iterating with Chat Assistants

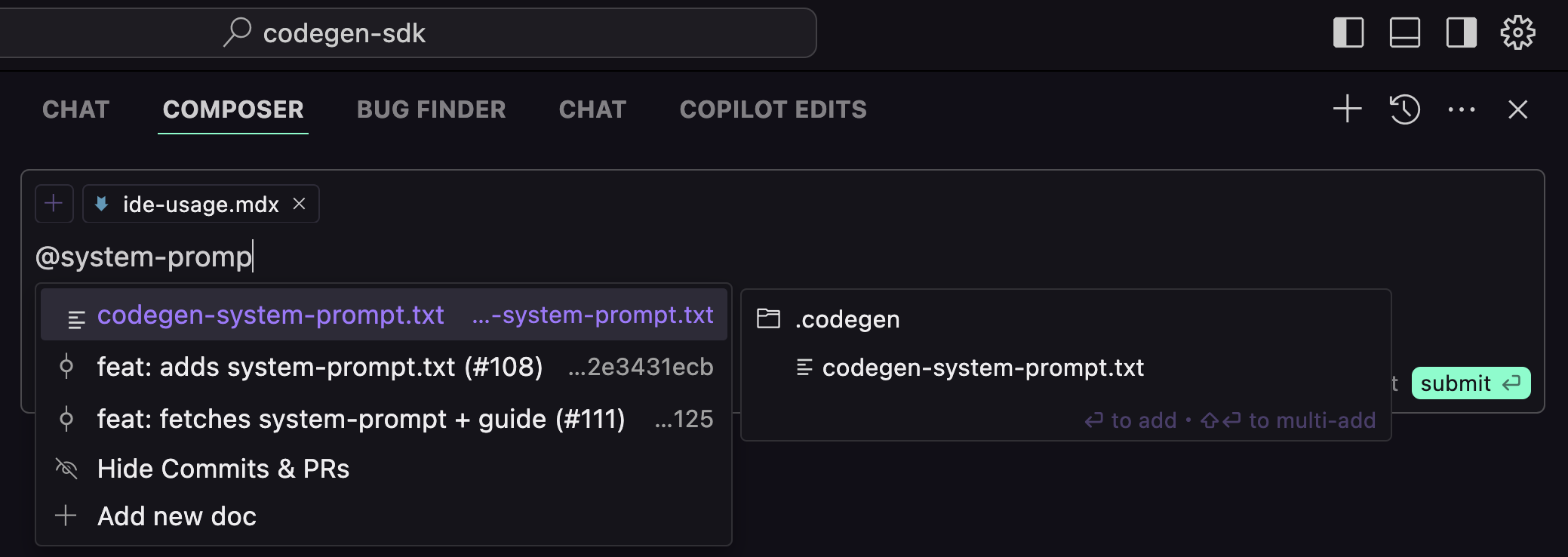

When you run codegen init, you will receive a system prompt optimized for AI consumption at .codegen/codegen-system-prompt.txt.

If you reference this file in “chat” sessions with Copilot, Cursor, Cody, etc., the assistant gets the Codegen-specific context it needs to write and explain Codegen code.

Collaborating with Cursor’s assistant and the Codegen system prompt

Each codemod created with codegen create also gets its own system prompt at .codegen/codemods/{name}/{name}-system-prompt.txt. This prompt contains:

- Relevant Codegen API documentation

- Examples of relevant transformations

- Context about your specific task
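You may want to use these prompts outside an IDE chat panel, for example with an API-based assistant. In that case you can load one as the system message yourself. This sketch prefers the codemod-specific prompt and falls back to the project-wide .codegen/codegen-system-prompt.txt. Two things are assumptions here: the role/content message format, which follows the common chat-completions convention, and the helper names codemod_prompt_path and build_messages.

```python
from __future__ import annotations

from pathlib import Path


def codemod_prompt_path(name: str, root: str | Path = ".") -> Path:
    """Path of the per-codemod prompt: .codegen/codemods/{name}/{name}-system-prompt.txt."""
    return Path(root) / ".codegen" / "codemods" / name / f"{name}-system-prompt.txt"


def build_messages(name: str, request: str, root: str | Path = ".") -> list[dict[str, str]]:
    """Build a chat-style message list with a Codegen prompt as the system message.

    Uses the codemod-specific prompt if it exists, otherwise the project-wide
    .codegen/codegen-system-prompt.txt; raises FileNotFoundError if neither exists.
    """
    candidates = [
        codemod_prompt_path(name, root),
        Path(root) / ".codegen" / "codegen-system-prompt.txt",
    ]
    for candidate in candidates:
        if candidate.is_file():
            system_prompt = candidate.read_text(encoding="utf-8")
            break
    else:
        raise FileNotFoundError(f"no Codegen system prompt found under {Path(root) / '.codegen'}")
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": request},
    ]
```

The returned list can then be passed to whichever chat client you use. Keeping the codemod prompt first means task-specific context wins when both files exist.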